Debugging Docker Container Networking Issues with nsenter

Docker is a good thing; we all know that. Its strength is “isolation”: all dependencies, listening network ports, and the junk files a program generates while running stay inside the container, while application data, logs, and service ports are exposed by Docker in a uniform way. This keeps things simple and easy to maintain.

But its weakness is also “isolation”. In production we naturally consider the cleanest environment the best. Once a bug surfaces, though, we need to work with the services in the container: running Python scripts, modifying database contents, debugging service connectivity. That is when the headaches begin. Inside the container, “luxuries” such as dig and netstat are naturally out of the question, and some containers lack even the most basic command-line tools such as wget. What can we do?

Generally speaking, we have two options. The first is to expose the service port and work with it from outside the container. The second is to install the debugging tools and runtime we need inside the container, or bind-mount them in, and work inside the container. Both have their pros and cons. So today, let’s try a **third option**: use the nsenter tool to let programs on the host “borrow” the running context of a Docker container[^1] (mainly its network and process namespaces). This breaks the boundary between inside and outside the container, so developers can use the host’s tools to solve problems quickly.

This article will also share a small script I wrote that neatly solves **domain name resolution on the container’s internal network** through a special mechanism provided by the host’s glibc. I can guarantee that, for this problem, mine is definitely the second-best solution in the Eastern Hemisphere.

Existing Problems

We’ve all been using Docker for years, and some readers may already have a question. It’s just a Docker container, so why not docker run -p 8001:80? Whether it’s a web service or anything else, port mapping is enough. What’s wrong with that?

Of course it’s great, but only for a while, not forever. On one of my hosts there are 16 web services alone. Web services are actually the easy part: we can manage them uniformly with traefik and route to the back-end services by domain name. Beyond that, different services have different dependencies. With docker-compose, each service gets its own isolated internal network, so postgres:5432 on service A’s internal network and postgres:5432 on service B’s network are, of course, different databases. Exposing everything would hurt security and isolation and make management harder. Then there are the port numbers themselves. What if a default port is maliciously scanned? What if two port numbers conflict? And if you pick arbitrary ports, who can remember which ports belong to twenty-odd services? On a public VPS the problem is even worse. Moreover, services such as ES and HDFS rely on host IPs to function: when a client connects to a *slave node*, the *master node* hands it an internal IP. In that case, what good does port mapping do?

The above addresses *solution one* from the introduction. Now let’s talk about *solution two*, whose problems are even more obvious. **You never know how “wonderful” the next Docker image you run into will be.** Not every container has a package manager such as apt-get or apk, and some don’t even have bash, busybox, or a libc. Even if you bind-mount a directory of prepared tools, whether they can run at all is another question. From a development point of view, VSCode is what I use most every day. For writing code on a remote Linux server, VSCode Remote is a good choice. But if all the dependencies are installed only inside the container, then even if the container’s files are exposed, VSCode gets no Language Server, code completion, API documentation, or syntax analysis. It becomes a bare editor, which seriously hurts productivity.

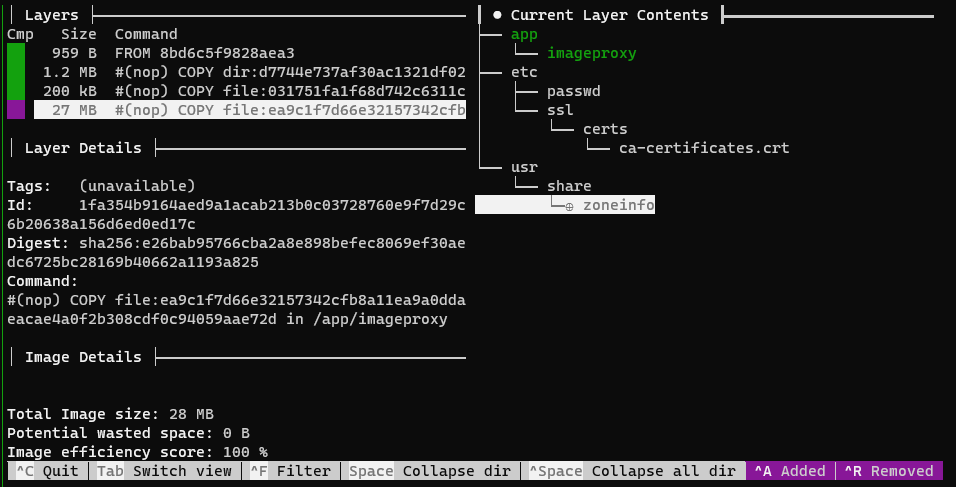

For example, the screenshot above shows the contents of the willnorris/imageproxy image[^2]. Apart from the program itself, /app/imageproxy, it contains only the bundled CA certificates, a passwd file, and the time zone database. There is no libc, no package manager, not even busybox, and therefore no shell.

The secret is a single line in its Dockerfile: the special FROM scratch base[^3]. We won’t go into how it works here. Just know that behind every developer proud of a tiny image, there are users shedding bitter tears.

Personally, I like Python a lot, but in container environments, especially other people’s containers, Python is cumbersome. You install Python, install pip, and then install the required libraries through pip, and some native packages also need gcc. For containers built on different bases, we have to adapt case by case… Isn’t there a once-and-for-all approach?

What is a namespace

Namespaces[^4] are a resource isolation mechanism provided by the Linux kernel and the cornerstone of Docker’s functionality. The mechanism isolates 8 kinds of system resources. Here we care most about the network namespace and the mount namespace, which decide the network interfaces and the file system a process sees. Docker uses these two namespaces to isolate the interfaces and file system visible to processes in a container from those of the host. This covers not only the virtual devices Docker creates (bridges such as br-XXXXXX and veth interfaces such as vethXXXXXXX@ifXXX on the host side) but also the loopback interface: 127.0.0.1 inside a container is not the host’s 127.0.0.1.
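The namespaces of any process can be listed from the host by reading the symbolic links under /proc/<PID>/ns. The following is a minimal sketch. The function name list_ns is chosen for illustration, and reading another user’s processes generally needs root.

```bash
# Print "<type> <link target>" for every namespace of a process,
# e.g. "net net:[4026531992]". Defaults to the current shell.
list_ns() {
    local pid="${1:-$$}" link
    [ -d "/proc/$pid/ns" ] || return 1
    for link in /proc/"$pid"/ns/*; do
        printf '%s %s\n' "$(basename "$link")" "$(readlink "$link")"
    done
}
```

Running list_ns $$ prints one line per namespace of the current shell. The number in brackets identifies the namespace.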

nsenter example

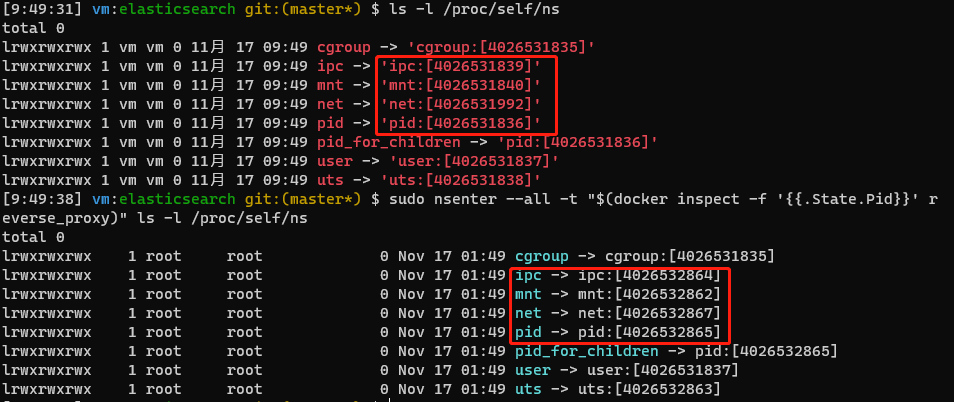

Let’s use the following example to show what changes when a process’s namespaces are switched with nsenter[^5]. From the host’s point of view, each process’s namespaces are visible as entries under /proc/<PID>/ns/ in the proc filesystem, where each namespace appears as a special symbolic link. When ls is launched through nsenter, several of its namespaces change, as shown by the different IDs in square brackets after each entry. These IDs are also stable: running the command again yields the same values.
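The same comparison can be scripted: two processes share a namespace exactly when their /proc/<PID>/ns/<type> links point at the same ID. The following is a sketch. same_ns is a hypothetical helper, and comparing against a container’s process needs root.

```bash
# Succeed (0) if two processes share a namespace of the given type (default: net),
# fail (1) if they don't, and return 2 if either link cannot be read.
same_ns() {
    local pid1="$1" pid2="$2" type="${3:-net}" a b
    a="$(readlink "/proc/$pid1/ns/$type")" || return 2
    b="$(readlink "/proc/$pid2/ns/$type")" || return 2
    [ "$a" = "$b" ]
}
```

For a shell on the host and the main process of a running container, same_ns should report different net namespaces.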

Using nsenter to access the container’s internal network

The nsenter man page[^5] tells us that entering a process’s namespaces only requires the PID of the target process. Access is, however, restricted by Linux’s permission model, including the capabilities mechanism[^6]. Reading another user’s /proc/<PID>/ns/ entries is subject to ptrace-style access checks, and actually joining a namespace with setns(2), which is what nsenter does, generally requires CAP_SYS_ADMIN. In practice this means root. As long as we run nsenter with sudo, we don’t need to worry about any of this.
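In scripts, the sudo requirement can be folded into a small helper that only calls sudo when the script is not already running as root. The following is a sketch, and as_root is a hypothetical name.

```bash
# Run a command as root: directly if we already are root, otherwise through sudo.
# Joining namespaces with nsenter typically needs CAP_SYS_ADMIN, i.e. root.
as_root() {
    if [ "$(id -u)" -eq 0 ]; then
        "$@"
    else
        sudo "$@"
    fi
}
```

A call such as as_root nsenter --net -t "$PID" ip addr then works the same way from a root shell and from a normal user account.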

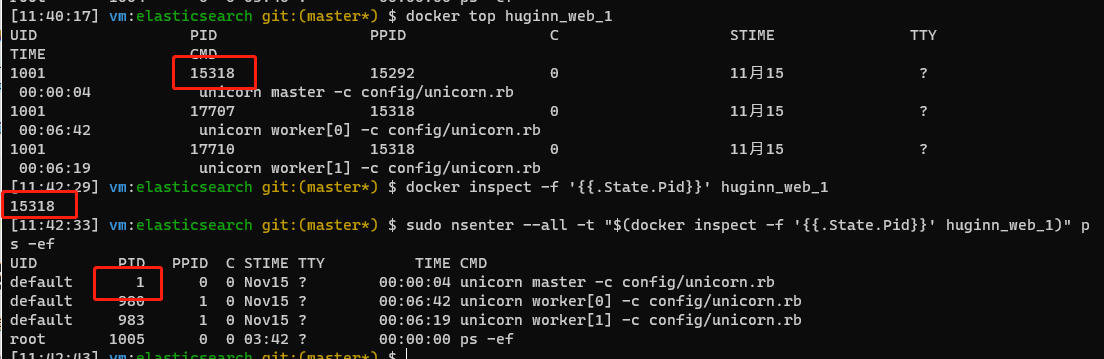

How do we get this PID? Running ps inside the container is out of the question, and from inside, the main process sees itself as PID 1 anyway. Outside the container, however, docker inspect reports the host PID of the process that is PID 1 in the container:

```bash
docker inspect -f '{{.State.Pid}}' "$CONTAINER_NAME"
```
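Because docker inspect reports a PID of 0 for a container that exists but is not running, it is worth validating the value before handing it to nsenter. The following sketch shows one way to do that, and container_pid is a hypothetical helper name.

```bash
# Print the host PID of a container's main process (PID 1 inside the container).
# Fails if the container does not exist or is not running (.State.Pid is 0 then).
container_pid() {
    local name="$1" pid
    pid="$(docker inspect -f '{{.State.Pid}}' "$name" 2>/dev/null)" || {
        echo "no such container: $name" >&2
        return 1
    }
    if [ -z "$pid" ] || [ "$pid" -eq 0 ]; then
        echo "container is not running: $name" >&2
        return 1
    fi
    echo "$pid"
}
```

Usage: pid="$(container_pid huginn_web_1)" && sudo nsenter --net -t "$pid" ip addr.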

Putting the two together, we can verify the effect:

```bash
sudo nsenter --all -t "$(docker inspect -f '{{.State.Pid}}' "$CONTAINER_NAME")" $COMMAND
```

Disadvantages of nsenter access method

Note that the example above runs nsenter --all, which hands **all namespaces** of the container process, including the mount namespace, to the command we run. This means that when the command is loaded, its binary and all the libraries it needs come from **inside the container**. So in the example above, the command that actually ran was the container’s own copy, not the host’s. That defeats the purpose of this article, because we specifically want to avoid modifying the container.

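Process tools such as htop and ps have a further limitation: they read everything from /proc, and which /proc they see depends on the mount namespace. Entering only the container’s PID namespace still leaves us looking at the host’s /proc, while also entering its mount namespace brings back the container’s (missing) binaries. So to use such tools, we can only fall back on the methods mentioned in the introduction. If it were me, I would probably build a statically linked htop or busybox and copy it into the container with docker cp.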
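The docker cp approach could look like the following sketch. It assumes a statically linked busybox binary at ./busybox on the host and a container whose root filesystem is writable. run_busybox_in is a hypothetical helper name.

```bash
# Copy a static busybox into a container and run one of its applets there.
# A static binary is required because the container may not ship a libc;
# it goes to / because minimal images may not even have /tmp.
run_busybox_in() {
    local name="$1"; shift
    docker cp ./busybox "$name":/busybox || return 1
    docker exec "$name" /busybox "$@"
}
```

With this helper, run_busybox_in huginn_web_1 ps would list the container’s processes as seen from inside it.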

So, for our purposes, nsenter is mainly good for reaching into the container’s internal network. Managing processes in the container or modifying its files may require combining it with other methods.
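For that network-only use, --net can replace --all. The command is then loaded from the host’s filesystem but sees the container’s interfaces, routes, and 127.0.0.1. The following is a sketch of a wrapper. container_net is a hypothetical name, and the wrapper assumes docker, sudo, and util-linux nsenter are available on the host.

```bash
# Run a *host* command inside a container's network namespace only.
# Mount, PID and other namespaces stay the host's, so host binaries and libraries are used.
container_net() {
    local name="$1"; shift
    local pid
    pid="$(docker inspect -f '{{.State.Pid}}' "$name")" || return 1
    [ -n "$pid" ] && [ "$pid" != 0 ] || { echo "container not running: $name" >&2; return 1; }
    sudo nsenter --net -t "$pid" "$@"
}
```

For example, container_net huginn_web_1 ss -tln uses the host’s ss to list the TCP sockets listening inside the container.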

Example: reaching into the container’s internal network

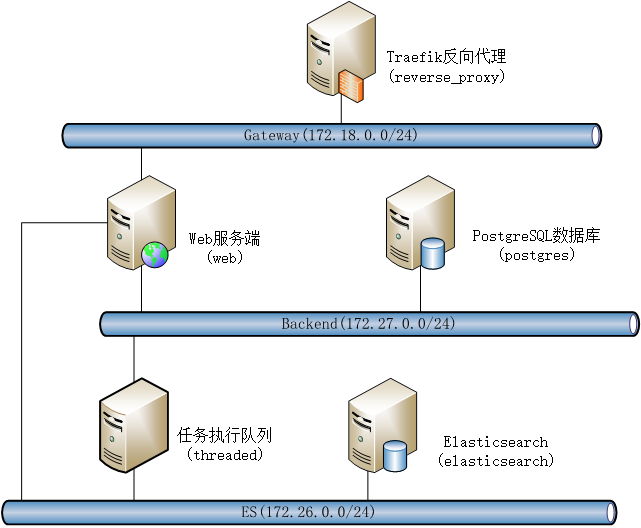

Let’s take an everyday task as an example and walk step by step through connecting to a Docker container network from outside the container for service maintenance. This example uses a compose file to build a Huginn service, and its network topology is shown below. Inside the web container, every service on the internal network can be reached by its container name and port, such as postgres:5432 or elasticsearch:9200.

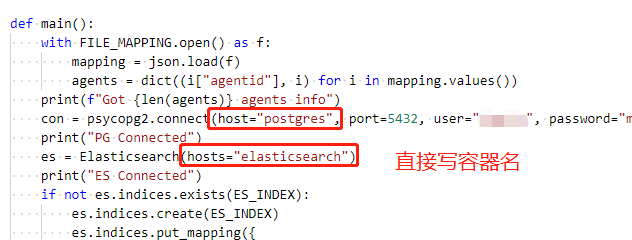

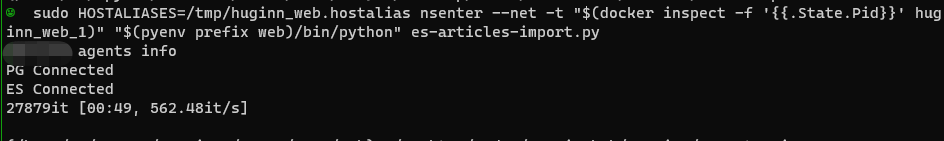
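The same topology can be read from the host: docker network inspect lists the containers attached to a network together with their addresses. The following is a sketch. The network name huginn_backend in the usage line is an assumption, since Compose normally prefixes network names with the project name.

```bash
# Print "<container> <ip>" for every container attached to a Docker network.
# IPv4Address is reported in CIDR form (e.g. 172.27.0.2/16); the suffix is stripped.
network_members() {
    docker network inspect -f '{{range .Containers}}{{.Name}} {{.IPv4Address}}{{"\n"}}{{end}}' "$1" |
        awk 'NF == 2 { sub(/\/.*/, "", $2); print $1, $2 }'
}
```

The call network_members huginn_backend prints one container-and-address pair per line.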

In this example, I wrote a Python script that reads data from the PostgreSQL database and writes it to Elasticsearch. Two containers are connected to both the PG database network (backend) and the ES database network (es), and here we choose the web container as the nsenter target. The Python library used to talk to PG, psycopg2, is a C extension module that needs gcc when it is installed, so I installed it into a pyenv virtual environment on the host.

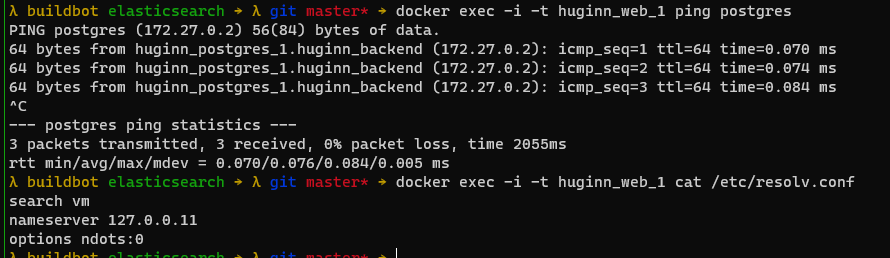

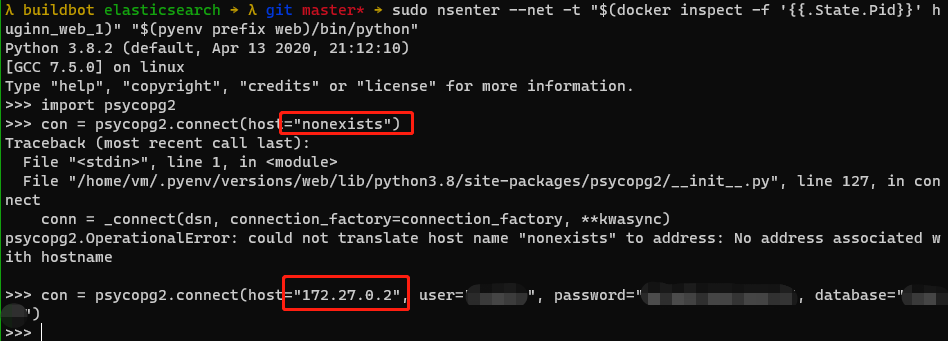

The code processes the data in PG and stores it in ES. We won’t go into its details here. The general idea is to use nsenter so that the Python process can connect to the database services on the container’s internal network. Even so, the connection settings in the code still need an address on that internal network. Where do we find it?
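One way to answer that from the host is docker inspect. Its NetworkSettings.Networks field lists every network a container is attached to, along with the container’s IP on each. The following is a sketch. container_ip is a hypothetical helper, and the names huginn_postgres_1 and huginn_backend in the usage line are assumptions based on Compose’s default naming.

```bash
# Print a container's IP address on one specific network.
# The template emits one "<network> <ip>" line per attached network; awk picks ours.
container_ip() {
    local name="$1" net="$2"
    docker inspect -f '{{range $n, $cfg := .NetworkSettings.Networks}}{{$n}} {{$cfg.IPAddress}}{{"\n"}}{{end}}' "$name" |
        awk -v net="$net" '$1 == net { print $2 }'
}
```

Usage: container_ip huginn_postgres_1 huginn_backend.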

As the screenshot shows, the PG database is at 172.27.0.2. We can use the following command to start the Python interpreter from pyenv and check whether the psycopg2 installed on the host can connect to the internal database:

```bash
sudo nsenter --net -t "$(docker inspect -f '{{.State.Pid}}' huginn_web_1)" "$(pyenv prefix web)/bin/python"
```

For comparison, when a connection fails, psycopg2 raises an exception as soon as connect is called. No exception was raised here, so this IP is reachable.
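When no client library is at hand, reachability can also be checked with nothing but bash: its /dev/tcp/<host>/<port> redirection opens a TCP connection. Because only the network namespace is entered, the host’s bash is used even if the container has no shell. The following is a sketch. tcp_probe is a hypothetical name, and timeout comes from GNU coreutils.

```bash
# Succeed if a TCP connection to HOST:PORT opens within 3 seconds.
# With a third argument (a host PID), probe from that process's network namespace.
tcp_probe() {
    local host="$1" port="$2" pid="${3:-}"
    local -a prefix=()
    [ -n "$pid" ] && prefix=(sudo nsenter --net -t "$pid")
    "${prefix[@]}" timeout 3 bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$host" "$port" 2>/dev/null
}
```

Usage: tcp_probe 172.27.0.2 5432 "$(docker inspect -f '{{.State.Pid}}' huginn_web_1)" && echo reachable.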

But one problem remains: are we really going to hardcode an IP in the code? That’s ugly. docker-compose down removes the Docker networks, and the next startup recreates them, so after a restart neither the IP nor even the subnet is guaranteed to stay the same. We need a way to make the container’s DNS resolution rules apply to programs outside the container as well.
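To see which DNS server a container uses without any tool inside it, its resolv.conf can be read from the host through /proc/<PID>/root. That link points at the process’s root directory as seen in its own mount namespace. The following is a sketch. The function names are hypothetical, and reading another user’s process needs root.

```bash
# Print the nameserver entries of a resolv.conf file.
nameservers() {
    awk '$1 == "nameserver" { print $2 }' "$1"
}

# Print the nameservers a container process uses, read through /proc/<PID>/root.
container_nameservers() {
    nameservers "/proc/$1/root/etc/resolv.conf"
}
```

For a container on a user-defined Docker network, the expected result is 127.0.0.11.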

A trick for DNS resolution on the container’s internal network

In the previous section we looked at /etc/resolv.conf inside the container and found that the DNS server it uses is 127.0.0.11, Docker’s embedded DNS server. A Stack Overflow answer[^7] explains how it works. But our host process does not pick up this address automatically: a process entered with nsenter --net still reads the host’s /etc/resolv.conf, because that file belongs to the mount namespace. Docker itself has no cleverer way to redirect name resolution either; it simply mounts its own file over /etc/resolv.conf in the container’s mount namespace. In short, there are only a few ways to change the DNS server of a single process:

- Create a new mount namespace and overwrite that file with a custom resolv.conf.

- Modify the resolv.conf file in the system.

- Hook fopen[^8], or getaddrinfo/gethostbyname[^9], in libc.
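Method 1 can be sketched with util-linux’s unshare. The steps are: enter the container’s network namespace, create a private mount namespace, bind-mount a resolv.conf that points at 127.0.0.11 over /etc/resolv.conf, and exec the host program. This is an untested sketch under several assumptions: root access, util-linux nsenter and unshare on the host, and host name lookups that go through glibc’s dns module rather than nss-resolve or nscd. The function names are hypothetical.

```bash
# Write a resolv.conf that sends every query to Docker's embedded DNS server.
make_docker_resolv() {
    printf 'nameserver 127.0.0.11\n' > "$1"
}

# Run a host command with a container's network namespace AND its DNS server.
# unshare --mount creates a mount namespace with private propagation by default,
# so the bind mount is only visible to this command and its children.
with_container_dns() {
    local pid="$1"; shift
    local conf status
    conf="$(mktemp)" && make_docker_resolv "$conf" || return 1
    sudo nsenter --net -t "$pid" unshare --mount \
        sh -c 'mount --bind "$0" /etc/resolv.conf && exec "$@"' "$conf" "$@"
    status=$?
    rm -f "$conf"
    return "$status"
}
```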

None of these is elegant. If method 3 works out, it should be simpler to use than the method described below; I’ll try it later.

So let’s take another route: glibc’s built-in HOSTALIASES mechanism[^9][^10][^11]. We build a list of domain name aliases, similar to a hosts file, and pass its path to the target process in the HOSTALIASES environment variable. Applications on the host can then resolve the container’s internal names to its IPs.
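The alias file is a plain list of “alias canonical-name” pairs, one per line. According to hostname(7), it is only consulted for names that contain no dot. The substitution can be imitated in a few lines, which is handy for checking a generated file. This mimics the documented behavior and is not glibc’s code, and hostalias_lookup is a hypothetical name.

```bash
# Imitate a HOSTALIASES lookup: a single-label name (no dot) is looked up in the
# first column and replaced by the second; anything else is returned unchanged.
hostalias_lookup() {
    local file="$1" name="$2" target
    case "$name" in
        *.*) echo "$name"; return ;;
    esac
    target="$(awk -v n="$name" '$1 == n { print $2; exit }' "$file")"
    echo "${target:-$name}"
}
```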

To build the alias list, we can combine nsenter with dig to query the names in bulk from inside the container’s network and write the results to a file. I wrote the following small script for this:

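```bash
#!/bin/bash
# Usage: ./hostalias.sh <container name>   (names to resolve are read from stdin)

CONTAINER="$1"
# Wildcard DNS service: "<ip>.<provider>" resolves back to <ip>
PROVIDER="traefik.me"
# PROVIDER="xip.io"

if [ -z "$CONTAINER" ]; then
    >&2 echo "usage: $0 <container name>"
    exit 1
fi

PID="$(docker inspect -f '{{.State.Pid}}' "$CONTAINER")"
if [ -z "$PID" ] || [ "$PID" = 0 ]; then
    >&2 echo "container $CONTAINER is not found or not running"
    exit 1
fi

while IFS='' read -r line; do
    [ -z "$line" ] && continue
    # Query Docker's embedded DNS (127.0.0.11) from the container's network namespace;
    # keep the last line in case dig prints a CNAME before the address
    IP="$(sudo nsenter --net -t "$PID" dig +short "$line" @127.0.0.11 | tail -n 1)"
    if [ -z "$IP" ]; then
        >&2 echo "wtf? I get nothing for $line"
        continue
    fi
    echo "$line $IP.$PROVIDER"
done
```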

To use it, pass the names to resolve on stdin, give the target container’s name as the argument, and save the output:

```
$ ./hostalias.sh huginn_web_1 > /tmp/huginn_web.hostalias <<EOF
postgres
elasticsearch
EOF
$ cat /tmp/huginn_web.hostalias
postgres 172.27.0.2.traefik.me
elasticsearch 172.26.0.3.traefik.me
```

Note that HOSTALIASES works by turning **a query for one domain name** into **a query for another domain name**, which is **different from the hosts file**. So both fields on each line **must be domain names**. That is why we need a public wildcard DNS service that turns any IP into a domain name[^ha-sof2]. Two that I know of are xip.io and traefik.me (xip.io has since gone offline; nip.io offers the same kind of service). As the example above shows, a name such as 172.27.0.2.traefik.me resolves to 172.27.0.2; their websites describe this in more detail.
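Building such a name is simple string work. The following sketch also rejects anything that is not a dotted-quad IPv4 address. The function name is hypothetical, and the default provider is taken from the example above.

```bash
# Turn an IPv4 address into a hostname answered by a wildcard DNS provider,
# e.g. 172.27.0.2 -> 172.27.0.2.traefik.me. Fails on malformed addresses.
ip_to_wildcard() {
    local ip="$1" provider="${2:-traefik.me}" i
    local re='^([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})$'
    [[ "$ip" =~ $re ]] || return 1
    for i in 1 2 3 4; do
        [ "${BASH_REMATCH[$i]}" -le 255 ] || return 1
    done
    echo "$ip.$provider"
}
```

One caveat: resolvers with DNS-rebinding protection may refuse to return private addresses for public names, in which case these wildcard names will not resolve.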

With this HOSTALIASES file, we can write Docker internal names such as postgres or elasticsearch directly in the script and let the mapping in the file translate them into IPs. No more worrying about IP addresses.

Configuring and running the final script then becomes plain and boring.
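Running it only needs the alias file passed in the environment. One detail to watch: sudo resets the environment by default (env_reset in sudoers), so HOSTALIASES set in front of sudo is likely to be dropped. Setting it with env after nsenter avoids that. The following is a sketch. run_with_aliases is a hypothetical helper, and etl.py in the usage line is a placeholder for the script.

```bash
# Run a host command inside a container's network namespace with a HOSTALIASES file.
# env sits after sudo/nsenter so the variable survives sudo's environment reset.
run_with_aliases() {
    local container="$1" aliases="$2"; shift 2
    local pid
    pid="$(docker inspect -f '{{.State.Pid}}' "$container")" || return 1
    sudo nsenter --net -t "$pid" env HOSTALIASES="$aliases" "$@"
}
```

Usage: run_with_aliases huginn_web_1 /tmp/huginn_web.hostalias "$(pyenv prefix web)/bin/python" etl.py.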

Epilogue

Remember how I called this the second-best solution in the Eastern Hemisphere? Right. Originally I wrote *the best*. But while writing this article and thinking it over, I concluded that the most trouble-free approach is probably to insert a hook via LD_PRELOAD that changes the DNS server in resolv.conf to 127.0.0.11, combined with nsenter. After all, HOSTALIASES needs a new alias file for every Docker network, and however convenient my script is, it still costs an extra command. Still, it’s fine for lazy people, and these workarounds are already far more convenient than what we’re used to.


[^1]: The underlying technology - Docker overview | Docker Documentation https://docs.docker.com/get-started/overview/#the-underlying-technology

[^2]: imageproxy/Dockerfile at main willnorris/imageproxy https://github.com/willnorris/imageproxy/blob/main/Dockerfile

[^3]: Create a simple parent image using scratch - Create a base image | Docker Documentation https://docs.docker.com/develop/develop-images/baseimages/#create-a-simple-parent-image-using-scratch

[^4]: namespaces - overview of Linux namespaces - Miscellaneous https://www.mankier.com/7/namespaces

[^5]: nsenter - run program in different namespaces - man page https://www.mankier.com/1/nsenter

[^6]: capabilities - overview of Linux capabilities - man page https://www.mankier.com/7/capabilities

[^7]: how does Docker Embedded DNS resolver work? - Stack Overflow https://stackoverflow.com/a/50730336/1043209

[^8]: how to change a file’s content for a specific process only? - Unix & Linux Stack Exchange https://unix.stackexchange.com/a/361312/264704

[^9]: HOSTALIASES https://blog.tremily.us/posts/HOSTALIASES/

[^10]: hostname - hostname resolution description - Miscellaneous https://www.mankier.com/7/hostname

[^11]: Overriding DNS entries per process - Unix & Linux Stack Exchange https://unix.stackexchange.com/a/304865/264704

[^ha-sof2]: hosts - Hostaliases file with an IP address - Unix & Linux Stack Exchange https://unix.stackexchange.com/a/226318/264704