Bypass Cloudflare Anti-scraping with Cyotek WebCopy + Fiddler

Foreword

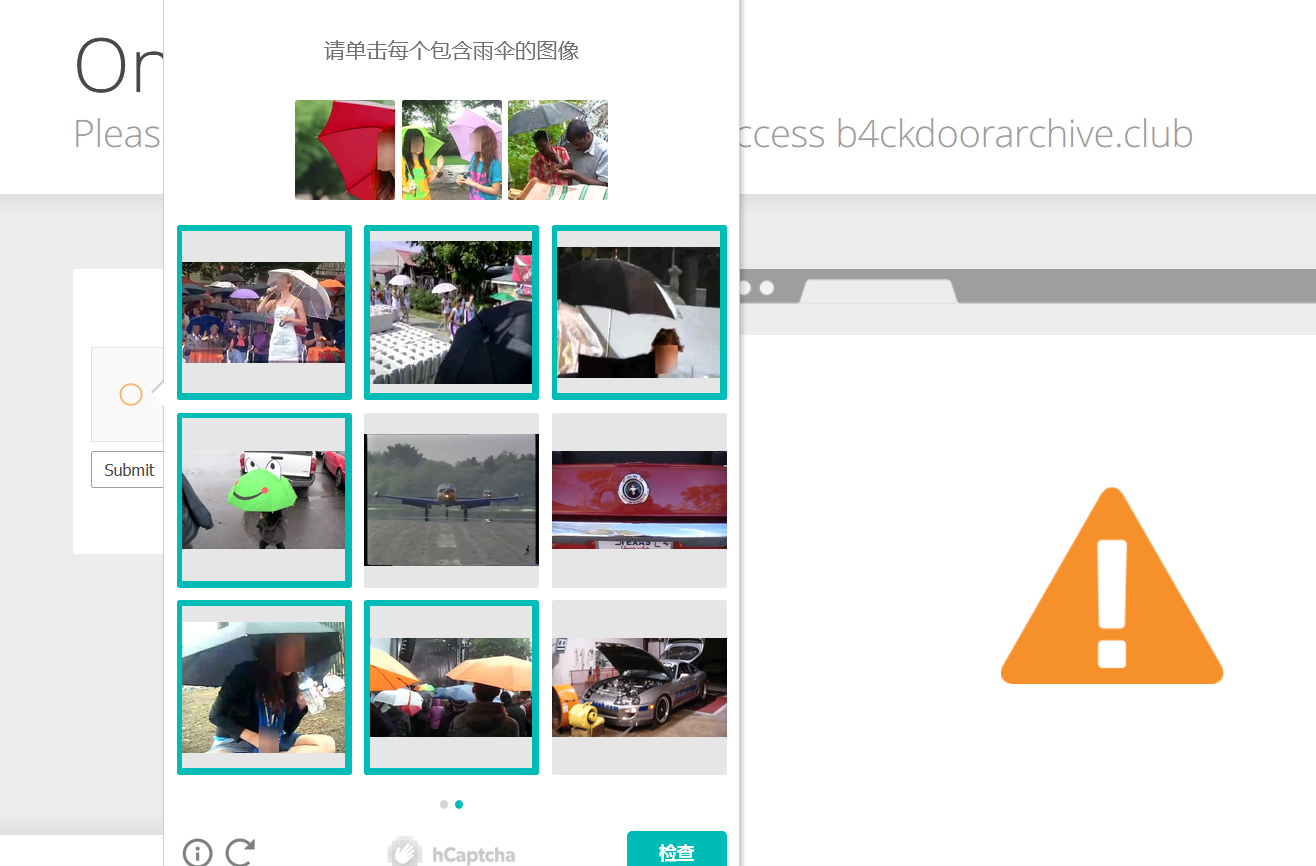

As a CDN provider, Cloudflare is at its most visible (and most annoying) when its DDoS protection page pops up while you are browsing a website. Under normal circumstances this is tolerable: at most the page, like the one in the picture, lingers for a moment. In some network environments, or when performing certain operations (such as the crawling we do in this article), Cloudflare forces the user to solve an image CAPTCHA. It recently switched from Google's reCAPTCHA to hCaptcha[^hcaptcha], because reCAPTCHA started charging for use. After the switch, several open-source Cloudflare bypass libraries [1][2][3] stopped working, and the author of the Node.js library [2] gave up outright and archived the project's GitHub repository.

But just because the open-source projects gave up doesn't mean we can: the work still has to get done. After weighing the strengths, weaknesses, and quirks of each crawler tool, and admitting that I am lazy and prefer tools with a GUI, I settled on Cyotek WebCopy plus Fiddler as the crawler solution. The former handles task scheduling; the latter handles the key request parameters and the pre- and post-processing of the returned content.

This article uses the resource site https://b4ckdoorarchive.club/HELL-ARCHIVE as an example. It demonstrates how to use Cyotek WebCopy to set up a crawl task quickly, control the crawler's requests and responses through Fiddler scripts, and crawl a Cloudflare-protected resource site semi-automatically.

Technology Selection

I know: if I were reading this article myself, I would grab the keyboard and start complaining right after the paragraph above. Why not use xxxxx or xxxxx? Below I list the similar alternatives I know of, and discuss their strengths and why I ultimately rejected each one for the specific needs of this article.

To build a crawler we need two functional components. The first is task scheduling, which covers scanning the site's directory structure, triggering web requests, and organizing and storing the downloaded files. The second is request construction, by which we mean: *modifying the data before a web request is sent and after a web response is returned, through means such as an HTTP proxy or middleware*. For our target (a website behind Cloudflare) there is extra work here: solve the JS CAPTCHA in a browser, attach the resulting authentication cookie to every request, and handle the error state when the cookie expires. As noted above, no automated script currently does this, so we have to solve the CAPTCHA manually in the browser and feed the cookie back to the crawler promptly. This manual step may need to be done 3 to 4 times.

Of course, some frameworks, such as scrapy, can do both jobs at once. But let's take it step by step.

Task scheduling component:

Cyotek WebCopy (final choice)

- Advantages:

- It has a GUI, which I like. The interface is clear and shows the number of successful downloads, the number of errors, and a progress bar.

- It has a sitemap feature: you can preview the sitemap before the task starts and get immediate feedback on how crawler parameter changes affect the crawl scope.

- Connecting it to an HTTP proxy is easy (at least not as painful as httrack), which makes it convenient to plug in other tools later to modify web requests.

- Disadvantages:

- There is no multithreading, though I don't know whether that is a configuration issue.

- It cannot resume an interrupted crawl. After restarting a task it crawls from the beginning, and it has no cache mechanism like scrapy and httrack.

scrapy

- Advantages: full-featured and does everything in one go. Whatever you want, it has.

- Disadvantages:

- You have to write code, roughly 1 day to 1 week of work. Not worth it.

- It cannot solve the CAPTCHA, so the benefit of automation is close to nil.

httrack

- Advantages: it has a GUI, which I like.

- Disadvantages:

- The GUI only covers parameter configuration. There is no monitoring of the running process and almost nothing in the log. You can't see the progress or the error messages, so naturally you can't debug it.

- Very poor customizability: requests cannot be modified. I tried various ways of routing it through an HTTP proxy, without success.

- The resume feature is buggy, and it is hard to debug once it breaks.

archivebox

- v0.4.3 is currently undergoing a large-scale refactor, and its PR [4] has been stalled for a year. I don't want to fix bugs for the maintainer, and I really don't want to read the code of open-source projects.

wget

- Advantages: it has a caching feature [5] (timestamping), which means it can pick up an interrupted crawl where it left off.

- Disadvantages: poor customizability, no URL filtering function.

Request construction components:

Fiddler (final choice)

- FiddlerScript serves as the request control interface. It doesn't have many features, but you can use scripts to set conditions for intercepting and modifying requests, which is enough.

- Its CA certificate can be installed into the system certificate store with one click, which makes HTTPS interception easy. This is a shortcut that none of the other tools I tried offers.

scrapy

- Requires writing code. Too much trouble.

burpsuite

- Only Java 8 is supported. ~~The fourth brother has gone off to challenge mountain after mountain and will not update.~~ I only have Java 11 on my computer and don't want to install Java 8. It is also unclear to me whether Burp has scripting features.

mitmproxy

- A tool that requires user interaction but has no GUI is rubbish. mitmweb is a toy.

WebCopy

- Some request parameters (headers, UA, etc.) can be modified, but they cannot be changed dynamically after the task has started, which does not meet our requirements.

Crawler implementation

The software used in this article, Cyotek WebCopy and Fiddler, is free:

Cyotek WebCopy Downloads - Copy websites locally for offline browsing • Cyotek

https://www.cyotek.com/cyotek-webcopy/downloads

Download Fiddler Web Debugging Tool for Free by Telerik

https://www.telerik.com/download/fiddler

Get Cloudflare Authentication Cookie

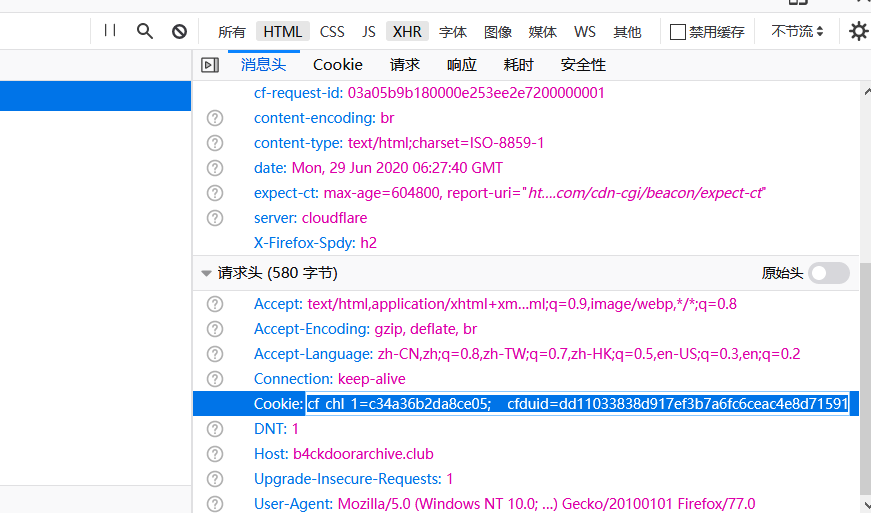

For the crawler to work, we need to solve Cloudflare's CAPTCHA manually and record the cookie the server returns.

First visit the page (https://b4ckdoorarchive.club/HELL-ARCHIVE) in a browser and follow the prompts to complete the hCaptcha. Cloudflare's CAPTCHA uses relatively few categories; the common ones are umbrellas, airplanes, boats, and bicycles. Many pictures, however, are taken from odd angles or show only part of the subject (for example, one picture shows just a bicycle wheel). Still, it is relatively easy compared with Google's CAPTCHA.

After verification succeeds and the page loads, open the browser developer tools, refresh the page, click any request in the “Network” tab, and copy its Cookie and User-Agent values for later use. That is all for this step.
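Before pasting the copied header anywhere, it can help to sanity-check it. The snippet below is a minimal sketch, not part of the original workflow: it splits a copied `Cookie` header into name/value pairs and checks for a clearance cookie. The cookie name `cf_clearance` and both placeholder strings are assumptions for illustration; use whatever your browser actually shows.

```python
from typing import Dict


def parse_cookie_header(raw: str) -> Dict[str, str]:
    """Split a copied `Cookie` request header value into name/value pairs."""
    cookies: Dict[str, str] = {}
    for part in raw.split(";"):
        # Split on the first "=" only, so values containing "=" stay intact.
        name, sep, value = part.strip().partition("=")
        if sep and name:
            cookies[name] = value
    return cookies


# Hypothetical placeholders standing in for the values copied from the Network tab.
copied_cookie = "cf_clearance=PLACEHOLDER-TOKEN; session=abc=123"
copied_user_agent = "Mozilla/5.0 (placeholder; copy the real UA from the browser)"

cookies = parse_cookie_header(copied_cookie)
# Assumption: the clearance cookie is named "cf_clearance".
has_clearance = "cf_clearance" in cookies
print(sorted(cookies), has_clearance)
```

If the check fails, the header was probably copied from a request made before the CAPTCHA was solved.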

Make sure you are comfortable with this step, because we may repeat it 3 to 4 times over the course of the crawl. Don't close this page or the developer tools window; keep them open for later.

Crawler configuration

First create a new project in WebCopy. It's not difficult: just click Next all the way through the new-project wizard.

There are two things to configure. First, click Project - Project Properties in the menu bar to open the project options.

- In the User Agent settings, set the crawler's UA to match the browser's. You can find your own UA with any online tool or in the browser developer tools. **This step is very important**, because Cloudflare ties the validity of the cookie to the UA.

- In the Query Strings settings, check Strip query string segments. The page we are crawling is a file listing with several sorting links at the top. I am too lazy to write filter rules, and ticking this option ensures that we download only the files in the listing plus index.html, without the extraneous link content.

Second, click Project - Proxy Server... in the menu bar and set the proxy server to Fiddler's local port. Fiddler listens on 127.0.0.1:8888 by default; if you have changed the listening port in Fiddler's options, use that port instead.
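To see why stripping query strings narrows the crawl, here is a minimal sketch of the idea in Python. It is not WebCopy's implementation, and the `?C=N;O=D` style sorting links and the `example.com` URLs are assumptions about what a typical file listing page looks like.

```python
from urllib.parse import urlsplit, urlunsplit


def strip_query(url: str) -> str:
    """Drop the query string and fragment, keeping scheme, host, and path."""
    parts = urlsplit(url)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))


# Hypothetical links found on a listing page: the page itself,
# two sorting links that only differ by query string, and one real file.
links = [
    "https://example.com/files/",
    "https://example.com/files/?C=N;O=D",
    "https://example.com/files/?C=M;O=A",
    "https://example.com/files/a.zip",
]

# After stripping, the sorting links collapse into the listing page itself.
unique_targets = sorted({strip_query(u) for u in links})
print(unique_targets)
```

Four discovered links reduce to two download targets, which is the effect the option is used for here.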

Fiddler configuration

We need Fiddler to modify the requests sent by WebCopy, in order to achieve two things:

- Before a request is sent, insert the cookie we obtained in the first step.

- Before a response is returned, check whether Cloudflare responded with an error, and if so, trigger a breakpoint so we can intervene manually.

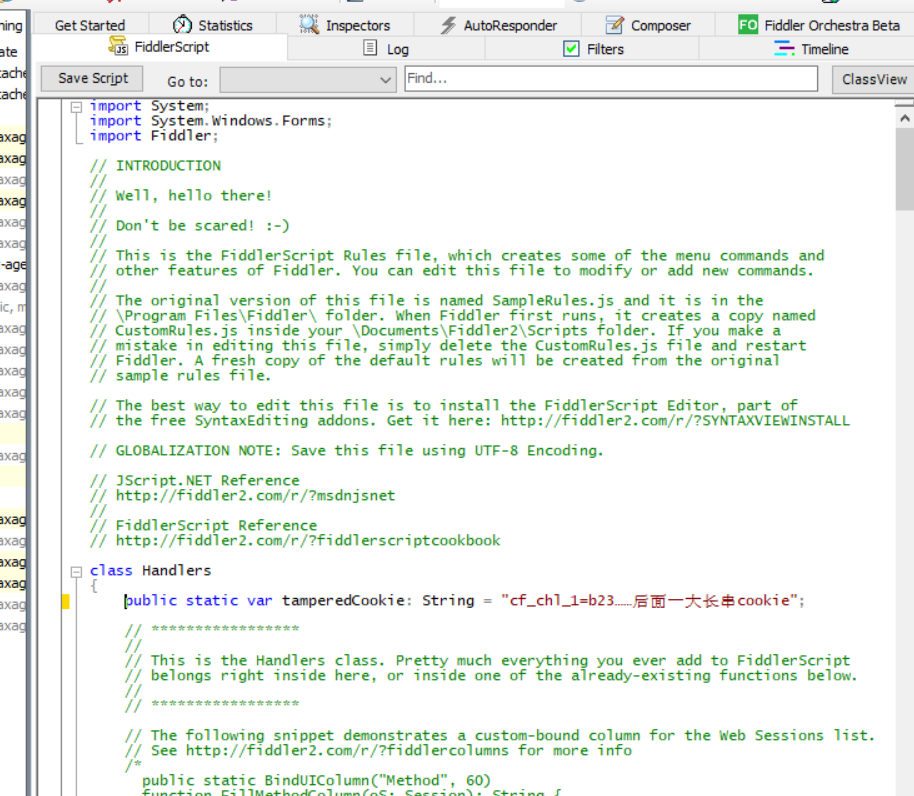

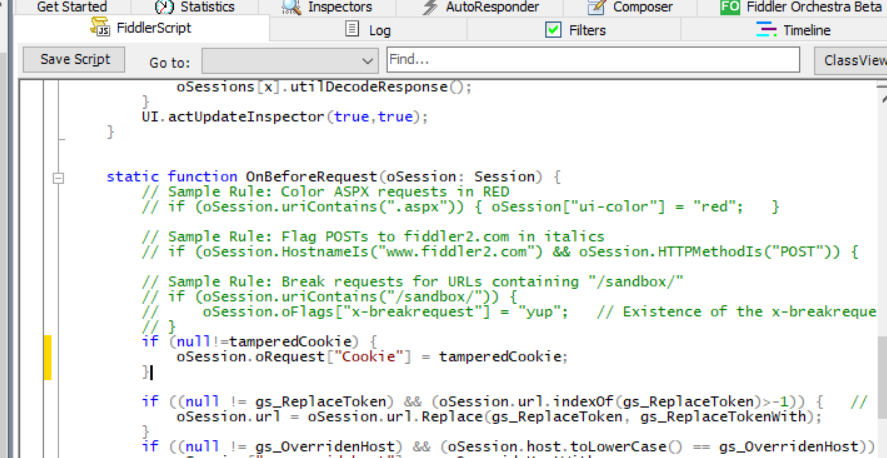

Open Fiddler and switch the right-hand panel to the FiddlerScript tab. By default Fiddler provides a code template, written in JScript.NET. We don't need to care about the details or read the documentation; just follow the existing pattern.

First, store the cookie we just obtained: at the very beginning of the Handlers class, define a tamperedCookie variable. Putting it at the top makes it easy to update later when the cookie expires.

Next, scroll down to the class's OnBeforeRequest method and insert our cookie modification at the very beginning (this implements function 1).

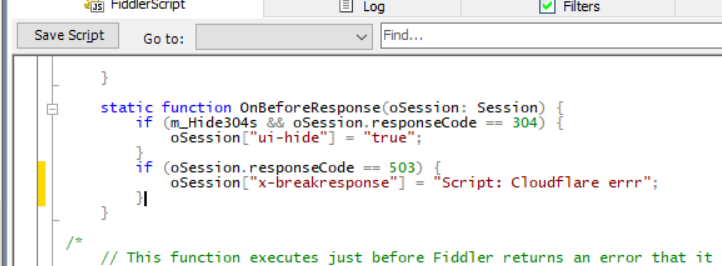
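The logic of function 1 can be modelled in a few lines. The sketch below is plain Python, not FiddlerScript, and only illustrates what the request hook does: overwrite the `Cookie` header with the stored value. Restricting the rewrite to one host is an assumption added here for safety, and the names `TARGET_HOST` and `on_before_request` are hypothetical.

```python
from typing import Dict

# Host taken from the article's example URL; the host filter itself is an assumption.
TARGET_HOST = "b4ckdoorarchive.club"

# Placeholder for the cookie copied from the browser (the article's tamperedCookie).
tampered_cookie = "cf_clearance=PLACEHOLDER-TOKEN"


def on_before_request(host: str, headers: Dict[str, str]) -> Dict[str, str]:
    """Return the headers to send, with the stored cookie injected for the target host."""
    if host.lower() != TARGET_HOST:
        return headers  # leave traffic to other hosts untouched
    # Drop any existing Cookie header regardless of letter case, then set ours.
    patched = {k: v for k, v in headers.items() if k.lower() != "cookie"}
    patched["Cookie"] = tampered_cookie
    return patched


patched = on_before_request(TARGET_HOST, {"Accept": "*/*", "cookie": "stale=1"})
untouched = on_before_request("other.example", {"Accept": "*/*"})
print(patched)
```

Keeping the cookie in a single variable mirrors the article's choice: when the cookie expires, only one line has to change.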

Scroll down further to the OnBeforeResponse method and check whether the HTTP status code is 503. If it is, set a special session field [6]. When Fiddler sees this field it hits a breakpoint and shows a prompt (this implements function 2). The next section explains how to modify the returned data.
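Function 2 is just as small. Again this is a Python model of the decision, not the FiddlerScript itself. The flag name `x-breakresponse` is my understanding of the session flag described in the Fiddler breakpoints article cited above, so treat it as an assumption; the flag value and the function name are hypothetical.

```python
from typing import Dict

# Assumption: this is the session flag that makes Fiddler pause on a response.
BREAK_FLAG = "x-breakresponse"


def on_before_response(status_code: int, flags: Dict[str, str]) -> Dict[str, str]:
    """Return the session flags, marking 503 responses for a manual breakpoint."""
    if status_code != 503:
        return flags  # normal responses pass straight through to the crawler
    marked = dict(flags)
    marked[BREAK_FLAG] = "cloudflare-503"  # hypothetical label shown to the operator
    return marked


ok_flags = on_before_response(200, {})
blocked_flags = on_before_response(503, {})
print(ok_flags, blocked_flags)
```

Only the 503 case is held back, so the crawl runs unattended until the cookie actually expires.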

After all modifications are done, remember to click Save Script to save the script.

Run and monitor crawler status

Next, click the big Copy button at the upper right of WebCopy to start the crawler. Isn't this crawler super convenient!

Let the progress bar run; there's no rush. In my experience, the Cloudflare cookie becomes invalid by the time the progress bar has advanced about a quarter of the way, and we then need to obtain a new cookie. Be sure to deal with it promptly, otherwise the WebCopy request will time out and WebCopy will move on to the next resource by itself.

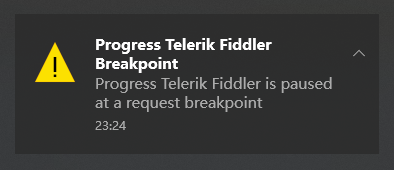

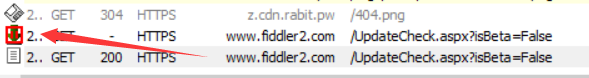

When the cookie expires, Fiddler triggers a breakpoint on the 503 response, and a notification like the one shown below pops up.

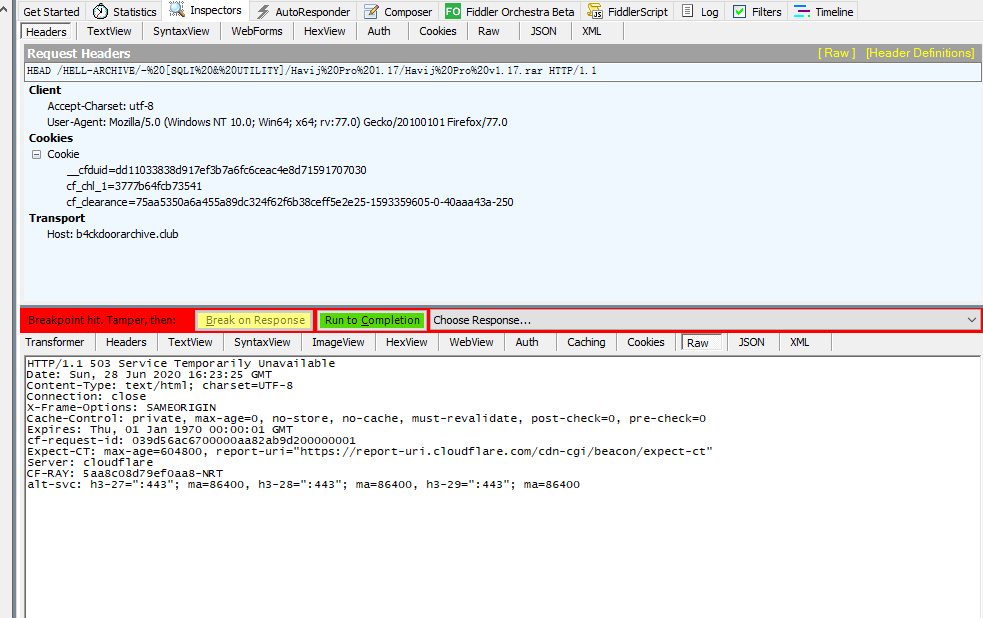

The failed request looks like this. At this point Fiddler has intercepted the error response, and WebCopy is blocked waiting; it does not yet know that the request failed. We will swap in a good response, and WebCopy will never know anything happened.

Return to the browser window we left open in the "Get Cloudflare Authentication Cookie" step and press F5 to refresh the page. Cloudflare will ask us to complete the hCaptcha again. Complete it as prompted, then repeat that step: paste the updated Cookie into the variable we defined earlier in FiddlerScript and click Save Script.

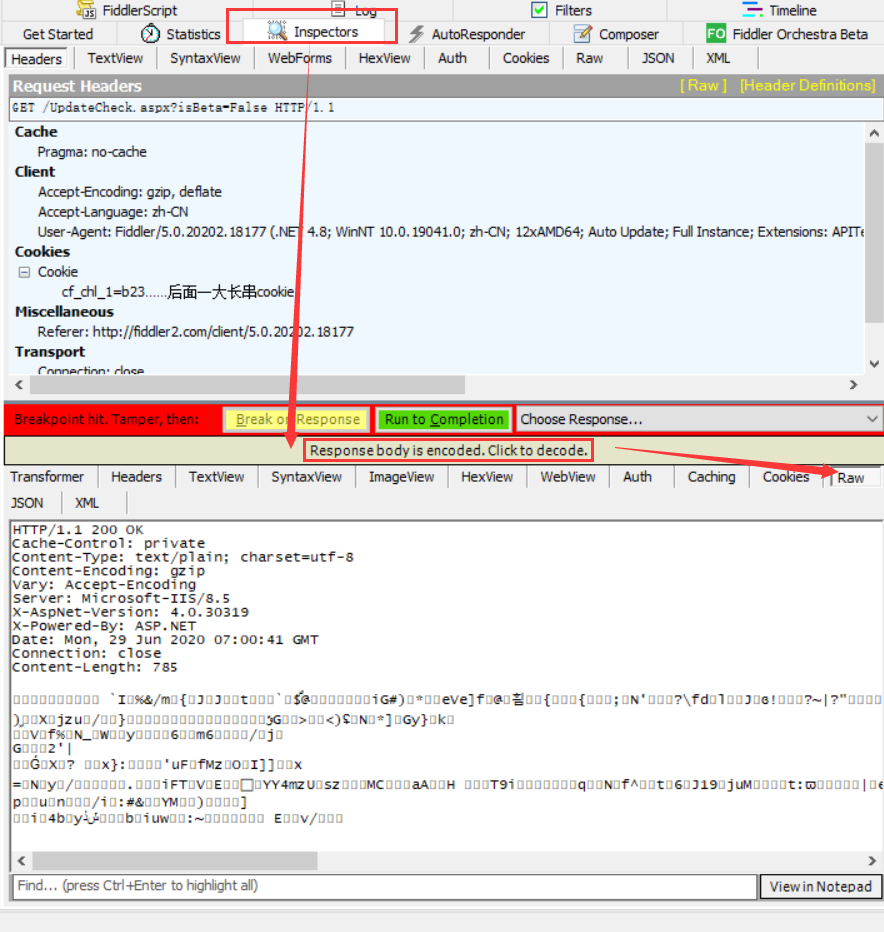

Note that the previously intercepted request is still blocked at this point. Its icon in the session list on the left is shown in the figure below. Select it and press R to replay the request. If the cookie is fine, the replayed request returns a normal 200 status code. We now need to substitute this normal response for the response of the intercepted request.

Select the session that returned normally and, in the right-hand panel, open the Raw tab as shown below to get the entire raw HTTP response. Copy all of it, then select the intercepted session and paste it in.

When that is done, click the green button to resume, and WebCopy receives a normal response.
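The whole recovery loop (hold the 503, refresh the cookie, replay, hand the good response to the crawler) can be summarized as a small simulation. This is a sketch under stated assumptions: `fake_site` stands in for the protected server, `refresh_cookie` stands in for the human solving the CAPTCHA, and every name here is hypothetical.

```python
from typing import Callable, Tuple

Response = Tuple[int, str]  # (status code, body)


def fetch_via_proxy(
    send: Callable[[str], Response],
    cookie: str,
    refresh_cookie: Callable[[], str],
    max_refreshes: int = 4,
) -> Tuple[Response, str]:
    """Send a request; on 503, refresh the cookie and replay before answering the crawler."""
    response = send(cookie)
    refreshes = 0
    while response[0] == 503 and refreshes < max_refreshes:
        cookie = refresh_cookie()  # manual step: solve the CAPTCHA, paste the new cookie
        response = send(cookie)    # replay the held request with the new cookie
        refreshes += 1
    # The crawler only ever sees the final response, never the intermediate 503.
    return response, cookie


def fake_site(cookie: str) -> Response:
    """Hypothetical server: accepts only the fresh cookie."""
    if cookie == "new-cookie":
        return (200, "file contents")
    return (503, "challenge page")


response, cookie_in_use = fetch_via_proxy(fake_site, "expired-cookie", lambda: "new-cookie")
print(response, cookie_in_use)
```

In the real workflow the loop body is done by hand in Fiddler, which is why the article calls the approach semi-automatic.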

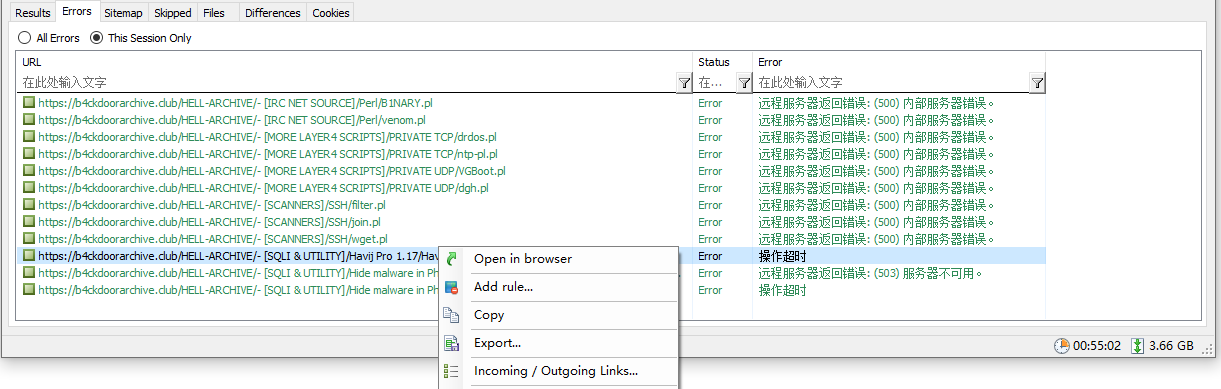

When the progress bar finishes, WebCopy summarizes the results and lists every error the crawler encountered. Of these, I verified that the HTTP 500 is a server configuration problem: the file cannot be downloaded in a browser either. The timeouts that follow were caused by my being slow while taking screenshots for this article, which made WebCopy time out. The few missing files can easily be downloaded by opening them in the browser.

At this point, our crawling work is complete.

Epilogue

3.66 GB in a runtime of 55 minutes. It's not that there was no code at all, but as we have seen, there is absolutely no need to write much of it. If I'm given two options,

- scrapy, 55 minutes to write code, 10 minutes to run.

- WebCopy, 10 minutes to write code, 55 minutes to run.

then I will definitely choose the latter! Single-threaded crawling is a bit slow, but the time passes quickly while browsing Bilibili. Isn't that better than losing hair over code?

I just love GUIs, I just hate writing code! A click of the mouse is productivity!

Finally, the cover image [7]:

References

1. Anorov/cloudflare-scrape: A Python module to bypass Cloudflare's anti-bot page. https://github.com/Anorov/cloudflare-scrape

2. codemanki/cloudscraper: Node.js library to bypass cloudflare's anti-ddos page. https://github.com/codemanki/cloudscraper

3. AurevoirXavier/cloudflare-bypasser-rust: A Rust crate to bypass Cloudflare's anti-bot page. https://github.com/AurevoirXavier/cloudflare-bypasser-rust

4. v0.4.3 (first Django release) by pirate · Pull Request #207 · pirate/ArchiveBox. https://github.com/pirate/ArchiveBox/pull/207

5. wget - The non-interactive network downloader - man page. https://www.mankier.com/1/wget#-N

6. Breakpoints in Fiddler. https://www.telerik.com/blogs/breakpoints-in-fiddler

7. Meme generator: Meme Templates - Imgflip. https://imgflip.com/memetemplates